Practical - 1

Aim: Data preprocessing using the scikit-learn Python library

Theory:

What is Data Preprocessing?

Data preprocessing is a data mining technique used to transform raw data into a useful and efficient format, using preprocessing techniques such as data cleaning, feature scaling, and encoding.

Why is it needed? What is its impact on the output of the model?

Data preprocessing is crucial in any data mining process because it directly affects the success of the project. Real-world data is unclean, and preprocessing reduces the complexity of the data under analysis.

Data is said to be unclean if it has missing attributes or attribute values, contains noise or outliers, or contains duplicate or wrong data. The presence of any of these degrades the quality of the results.

Data Preprocessing Techniques:

Standardization

Standardization rescales each feature to have a mean of 0 and a standard deviation of 1. It is useful when the features of the input dataset have large differences between their ranges.
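
As a minimal sketch of standardization, the snippet below applies scikit-learn's StandardScaler to a small made-up array whose two columns have very different ranges. The data values are assumptions chosen only for illustration.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Toy data (made up): two features with very different ranges.
X = np.array([[1.0, 1000.0],
              [2.0, 2000.0],
              [3.0, 3000.0]])

scaler = StandardScaler()
X_std = scaler.fit_transform(X)

# After standardization each column has mean ~0 and standard deviation ~1.
col_means = X_std.mean(axis=0)
col_stds = X_std.std(axis=0)
print(col_means, col_stds)
```

Both columns end up on the same scale, so neither dominates a distance-based model such as KNN.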

Normalization

Normalization rescales numeric values to a common scale, and the term covers two different operations. In scikit-learn, the Normalizer class scales each individual sample (row) to have unit norm. More commonly, and in this practical, normalization means min-max scaling: MinMaxScaler maps each numeric column to a fixed range, [0, 1] by default, so that features with different ranges become comparable while the relative spacing of values within a column is preserved.
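
Because "normalization" can mean either per-sample scaling to unit norm or per-column scaling to a common range, a small sketch contrasting the two may help. It uses scikit-learn's Normalizer and MinMaxScaler on made-up data; the array values are assumptions for illustration only.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, Normalizer

# Toy data (made up): three samples, two features.
X = np.array([[1.0, 10.0],
              [2.0, 20.0],
              [3.0, 40.0]])

# Min-max scaling works per column: each feature is mapped to [0, 1].
X_minmax = MinMaxScaler().fit_transform(X)

# Normalizer works per row: each sample is rescaled to unit L2 norm.
X_unit = Normalizer(norm="l2").fit_transform(X)
row_norms = np.linalg.norm(X_unit, axis=1)

print(X_minmax)
print(row_norms)
```

The steps later in this practical use MinMaxScaler, that is, the per-column variant.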

Encoding

Features are not always continuous; many are categorical and stored as text.

For example, the country a person belongs to [India, USA] or gender [Male, Female]. Such features can be encoded as integers so that machines can process them.

OneHotEncoder helps us in converting categorical variables to features that can be used with scikit-learn estimators. It transforms each categorical feature with n_categories possible values into n_categories binary features, with one of them 1, and all others 0.
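
A minimal sketch of one-hot encoding, using the country and gender example above as made-up input. The sparse result is converted with toarray() so it can be printed as a dense array.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# Toy categorical data (made up): one column for country, one for gender.
X = np.array([["India", "Male"],
              ["USA", "Female"],
              ["India", "Female"]])

encoder = OneHotEncoder()
# The default output is a SciPy sparse matrix; toarray() makes it dense.
X_encoded = encoder.fit_transform(X).toarray()

# Categories are sorted per column: [India, USA] and [Female, Male].
print(encoder.categories_)
print(X_encoded)
```

Two features with two categories each become four binary columns, with exactly one 1 per original feature in every row.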

Discretization

Sometimes it is necessary to transform continuous values into discrete bins. This is done with KBinsDiscretizer. By default, the output is one-hot encoded into a sparse matrix; this can be changed with the encode parameter.
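
As a rough illustration, the sketch below bins six made-up values into three equal-width bins. It sets encode="ordinal" so that the result is the bin index rather than the default one-hot output; the choice of three bins and the "uniform" strategy are assumptions for this example.

```python
import numpy as np
from sklearn.preprocessing import KBinsDiscretizer

# Toy continuous values (made up), shaped as a single feature column.
values = np.array([[0.0], [1.0], [2.0], [3.0], [4.0], [5.0]])

# encode="ordinal" returns the bin index instead of one-hot columns;
# strategy="uniform" makes all bins equally wide.
binner = KBinsDiscretizer(n_bins=3, encode="ordinal", strategy="uniform")
bins = binner.fit_transform(values)

print(binner.bin_edges_[0])
print(bins.ravel())
```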

Imputation of missing values

In real-world datasets, we often find many missing values. This can happen for various reasons: human error, sensor failure, or data type issues. The basic strategy people prefer is discarding all the rows containing missing values, but this shrinks the dataset and may throw away important information. Hence we need suitable strategies to impute the missing values instead.

The SimpleImputer class provides basic strategies for imputing missing values. Missing values can be imputed with a provided constant value, or using the statistics of each column in which the missing values are located. This class also allows for different missing values encodings.
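
A short sketch of the two strategies just described, on a made-up single-column array: filling with the column mean and filling with a provided constant. The values and the constant 0.0 are assumptions for illustration.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Toy column (made up) with one missing entry.
X = np.array([[22.0], [np.nan], [38.0], [30.0]])

# Replace NaN with the mean of the observed values in the column.
mean_imputer = SimpleImputer(missing_values=np.nan, strategy="mean")
X_mean = mean_imputer.fit_transform(X)

# Replace NaN with a fixed constant instead.
const_imputer = SimpleImputer(strategy="constant", fill_value=0.0)
X_const = const_imputer.fit_transform(X)

print(X_mean.ravel())
print(X_const.ravel())
```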

Dataset Description:

| Variable | Definition | Key |
| --- | --- | --- |
| survival | Survival | 0 = No, 1 = Yes |
| pclass | Ticket class | 1 = 1st, 2 = 2nd, 3 = 3rd |
| sex | Sex | |
| Age | Age in years | |
| sibsp | # of siblings / spouses aboard the Titanic | |
| parch | # of parents / children aboard the Titanic | |
| ticket | Ticket number | |
| fare | Passenger fare | |
| cabin | Cabin number | |
| embarked | Port of Embarkation | C = Cherbourg, Q = Queenstown, S = Southampton |

1. Import the necessary packages

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

from sklearn.impute import SimpleImputer

from sklearn.preprocessing import MinMaxScaler

from sklearn.neighbors import KNeighborsClassifier

from sklearn.metrics import accuracy_score

from sklearn.preprocessing import LabelEncoder

2. Import the dataset file
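
The loading code and file name are not given for this step, so the following is only a sketch of what it might look like. The name `titanic.csv` is hypothetical, and to keep the example self-contained a tiny in-memory CSV with made-up rows stands in for the real file.

```python
import io
import pandas as pd

# In the practical this would be something like:
#     df = pd.read_csv("titanic.csv")   # hypothetical file name
# Here a small in-memory CSV with made-up rows stands in for the file.
csv_text = io.StringIO(
    "Survived,Pclass,Sex,Age,SibSp,Parch,Fare,Embarked\n"
    "0,3,male,40,0,0,8.0,S\n"
    "1,1,female,35,1,0,80.0,C\n"
    "1,2,female,,0,1,20.0,S\n"
)
df = pd.read_csv(csv_text)

print(df.shape)
print(df.head())
```

Empty fields in the CSV are read as NaN, which is what the null check in a later step counts.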

3. Create training and testing sets and apply KNN before preprocessing

x=df[['Pclass','SibSp','Parch','Fare']]

y = df['Survived']

X_train,X_test,Y_train,Y_test = train_test_split(x,y,test_size=0.2)

knn=KNeighborsClassifier(n_neighbors=5)

knn.fit(X_train,Y_train)

accuracy_score(Y_test,knn.predict(X_test))

Accuracy : 0.5842696629213483
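
Note that train_test_split shuffles the rows randomly, so an accuracy value like the one above can change from run to run. As a hedged sketch on made-up data, passing a fixed random_state (the value 42 is arbitrary) makes the split repeatable:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data (made up): 10 samples, 2 features, binary labels.
X = np.arange(20).reshape(10, 2)
y = np.array([0, 1] * 5)

# The same random_state yields the same shuffle, hence the same split.
split_a = train_test_split(X, y, test_size=0.2, random_state=42)
split_b = train_test_split(X, y, test_size=0.2, random_state=42)

same = all(np.array_equal(a, b) for a, b in zip(split_a, split_b))
print(same)
```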

4. Extract the dependent and independent variables

x= df.iloc[:, :-1].values

y= df.iloc[:, 8].values

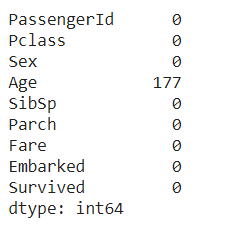

5. Check for null values

df.isnull().sum()

Here, Age has 177 null values, so we have to handle the missing values.

6. Handle missing data (replace missing values with the mean)

imputer= SimpleImputer(missing_values =np.nan, strategy='mean')

imputer= imputer.fit(x[:, 3:4])

x[:, 3:4]= imputer.transform(x[:, 3:4])

7. Encode categorical data

le = LabelEncoder()

x[:,2]= le.fit_transform(x[:,2])

x[:,7]= le.fit_transform(x[:,7])

8. Apply normalization

scaler = MinMaxScaler()

norm_df = scaler.fit_transform(x[:,3:4])

x[:,3:4]=norm_df

norm_df = scaler.fit_transform(x[:,6:7])

x[:,6:7]=norm_df

9. Check accuracy after preprocessing the data

X_train,X_test,y_train,y_test = train_test_split(x,y,test_size=0.2)

knn=KNeighborsClassifier(n_neighbors=5)

knn.fit(X_train,y_train)

accuracy_score(y_test,knn.predict(X_test))

Accuracy : 0.7247191011235955
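
The steps above fit the imputer and scaler on all rows before the data is split. An alternative, which is not what this practical does, is to wrap the same preprocessing in a scikit-learn Pipeline so that it is fitted on the training rows only. The sketch below uses randomly generated stand-in data rather than the Titanic file, swaps LabelEncoder for OneHotEncoder on the categorical feature, and uses column names and a toy target that are assumptions for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler, OneHotEncoder

# Synthetic stand-in data (random, NOT the Titanic dataset).
rng = np.random.default_rng(0)
n = 200
df = pd.DataFrame({
    "Age": rng.uniform(1, 80, n),
    "Fare": rng.uniform(5, 500, n),
    "Sex": rng.choice(["male", "female"], n),
})
df.loc[rng.choice(n, 20, replace=False), "Age"] = np.nan  # inject missing ages
y = (df["Sex"] == "female").astype(int).to_numpy()        # toy target

# Numeric columns: mean imputation followed by min-max scaling.
numeric = Pipeline([
    ("impute", SimpleImputer(strategy="mean")),
    ("scale", MinMaxScaler()),
])
# Categorical column: one-hot encoding.
preprocess = ColumnTransformer([
    ("num", numeric, ["Age", "Fare"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["Sex"]),
])
model = Pipeline([
    ("prep", preprocess),
    ("knn", KNeighborsClassifier(n_neighbors=5)),
])

X_train, X_test, y_train, y_test = train_test_split(
    df, y, test_size=0.2, random_state=0
)
model.fit(X_train, y_train)            # preprocessing is fitted on X_train only
accuracy = model.score(X_test, y_test)
print(accuracy)
```

Because the imputer mean and the scaler range are learned from the training rows alone, the test rows do not influence the preprocessing.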
